World Environment Day: Generative AI can write poems, crack jokes, diagnose diseases, and maybe even steal your job — but it can’t do any of that without a small country’s worth of electricity and a swimming pool of water. Welcome to the age of intelligent machines with very dumb environmental habits.

A new report by IMD Business School and a sobering MIT investigation pull back the curtain on tech’s best-kept secret: the AI boom is running on fossil-fuelled fumes and water-thirsty data centres. If you’re picturing a sleek “cloud,” think again. The reality looks more like a grid-hogging, water-chugging beast in a server farm near you.

Google Can Talk to You, But First It Has to Out-Eat Austria

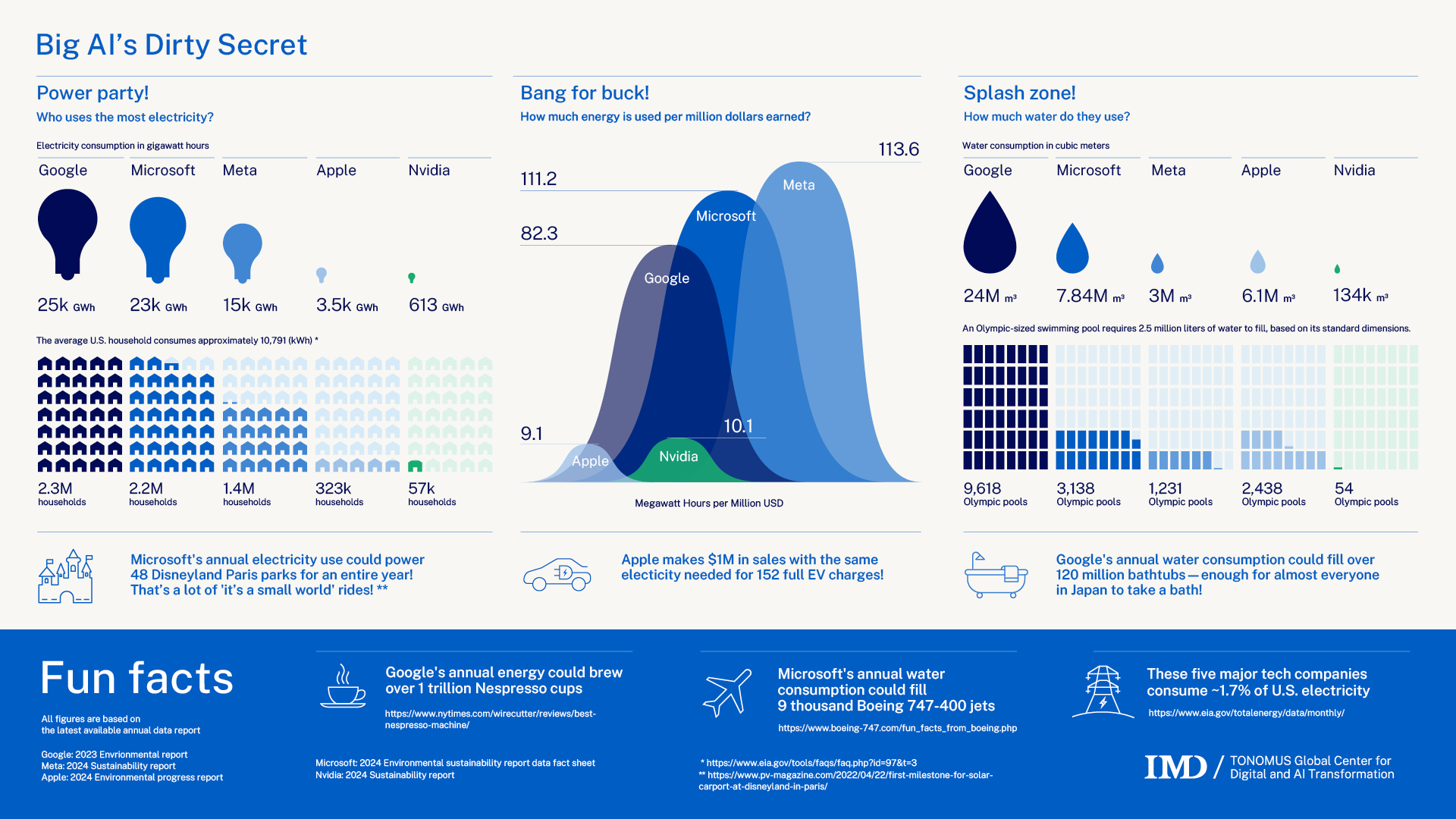

Let’s start with the biggest offenders. According to IMD, Google leads the AI gluttony chart with a whopping 25 terawatt-hours (TWh) of electricity consumed annually. That’s more than 2.3 million American households use in a year. Microsoft trails close behind at 23 TWh, enough to keep 48 Disneyland Paris parks lit for a year. Meta, Apple, and Nvidia round out the top five with hefty energy bills of their own.
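Comparisons like “2.3 million households” hide an assumption about how much one home uses. As a quick sanity check, the comparison can be run backwards. This minimal Python sketch uses only the article’s figures; the function name is illustrative, and the per-household value it prints is derived here, not reported by IMD.

```python
# Figures as quoted in the article; the per-household value is derived, not reported.
GOOGLE_TWH = 25.0          # Google's annual electricity use, per IMD
HOUSEHOLDS = 2_300_000     # "more than 2.3 million American households"
KWH_PER_TWH = 1_000_000_000


def implied_kwh_per_household(total_twh: float, households: float) -> float:
    """Annual kWh per household implied by an 'X TWh = N households' comparison."""
    return total_twh * KWH_PER_TWH / households


per_home_kwh = implied_kwh_per_household(GOOGLE_TWH, HOUSEHOLDS)
print(f"Implied use: {per_home_kwh:,.0f} kWh per household per year")
```

That works out to roughly 10.9 MWh per home per year, close to the ~10.7 MWh per home implied by the GPT-3 comparison further down, so the article’s two household yardsticks are at least consistent with each other.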

These aren’t just numbers. They’re giant, humming data centres swallowing energy faster than you can say “ChatGPT.” And they’re multiplying, thanks to our obsession with smarter models that now require more power than your entire neighbourhood ever will.

As MIT’s Noman Bashir puts it, “What is different about generative AI is the power density it requires… a generative AI training cluster might consume seven or eight times more energy than a typical computing workload.”

Efficiency? Meta Missed the Memo

You’d think that companies minting billions would at least do it cleanly. Not quite.

Meta is the least energy-efficient of the bunch, guzzling 113.6 megawatt-hours (MWh) per million dollars of revenue. Microsoft isn’t much better at 111.2 MWh, with Google clocking in at 82.3 MWh. In contrast, Apple and Nvidia sip their juice like civilised billionaires, using just 9.1 and 10.1 MWh, respectively.

To put that into perspective: Apple can make a million bucks using the same amount of electricity it takes to charge 152 Teslas. Meta needs a power station and maybe a few guilt trips.
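The Tesla yardstick can be stretched to the whole table. This is a minimal Python sketch, assuming every “charge” uses the same amount of energy, which is backed out of the Apple comparison (9.1 MWh ÷ 152 charges, about 60 kWh); the helper `tesla_charges` is hypothetical and exists only for illustration.

```python
# Electricity use per US$1 million of revenue, in MWh, as quoted from IMD.
MWH_PER_MILLION_USD = {
    "Meta": 113.6,
    "Microsoft": 111.2,
    "Google": 82.3,
    "Nvidia": 10.1,
    "Apple": 9.1,
}
APPLE_TESLA_CHARGES = 152  # the article's Apple comparison

# Assumption: every "charge" uses the same energy, backed out from the Apple figure.
KWH_PER_CHARGE = MWH_PER_MILLION_USD["Apple"] * 1000 / APPLE_TESLA_CHARGES


def tesla_charges(mwh: float) -> float:
    """Number of equal-sized Tesla charges that `mwh` megawatt-hours would cover."""
    return mwh * 1000 / KWH_PER_CHARGE


print(f"Implied energy per charge: {KWH_PER_CHARGE:.1f} kWh")
# Print companies from most to least efficient, with charges and ratio to Apple.
for company, mwh in sorted(MWH_PER_MILLION_USD.items(), key=lambda kv: kv[1]):
    ratio = mwh / MWH_PER_MILLION_USD["Apple"]
    print(f"{company:<10} {mwh:6.1f} MWh  ~{tesla_charges(mwh):5.0f} charges  {ratio:4.1f}x Apple")
```

On that yardstick, Meta’s million dollars costs roughly 1,900 Tesla charges, about 12.5 times Apple’s.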

It’s Not Just Hot Air — AI Is Thirsty Too

If AI models were people, they’d be banned from your bathroom for overusing water. These algorithms aren’t just power-hungry — they’re ridiculously thirsty.

Google again tops the list, using 24 million cubic metres of water annually to cool its AI hardware. That’s more than 120 million bathtubs’ worth. Microsoft chugs 7.8 million cubic metres, Apple 6.1 million, and Meta 3 million, while Nvidia, the relative teetotaller, still uses 134,000 cubic metres.
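The bathtub comparison implies a tub size, which can then be applied to every company on the list. A minimal Python sketch, assuming all tubs are the same size; since Google’s water fills “over” 120 million tubs, the 200-litre figure it derives is an upper bound on that size, and the helper `bathtubs` is hypothetical.

```python
LITRES_PER_CUBIC_METRE = 1000

# Annual water use in cubic metres, as quoted in the article.
WATER_M3 = {
    "Google": 24_000_000,
    "Microsoft": 7_800_000,
    "Apple": 6_100_000,
    "Meta": 3_000_000,
    "Nvidia": 134_000,
}

# Tub size implied by "24 million m³ = over 120 million bathtubs" (an upper bound).
LITRES_PER_TUB = WATER_M3["Google"] * LITRES_PER_CUBIC_METRE / 120_000_000


def bathtubs(m3: float) -> float:
    """How many tubs of LITRES_PER_TUB litres a volume of `m3` cubic metres fills."""
    return m3 * LITRES_PER_CUBIC_METRE / LITRES_PER_TUB


print(f"Implied tub size: {LITRES_PER_TUB:.0f} litres")
for company, m3 in WATER_M3.items():
    print(f"{company:<10} {bathtubs(m3) / 1e6:6.2f} million bathtubs")
```

Even Nvidia’s comparatively modest 134,000 cubic metres would fill roughly 670,000 tubs of that size.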

“Just because this is called ‘cloud computing’ doesn’t mean the hardware lives in the cloud,” Bashir reminds us. “Data centers are present in our physical world, and because of their water usage they have direct and indirect implications for biodiversity.”

The Carbon Code Nobody Wants to Read

Researchers cited by MIT estimate that training GPT-3 alone ate up 1,287 MWh of electricity, roughly what 120 average US homes use in a year. That translates into about 552 tons of CO₂ emissions… before you even ask it to write your grocery list.
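The GPT-3 figures can be unpacked the same way. This Python sketch derives two numbers the article does not state outright: the per-home consumption behind the “120 homes” comparison, and the carbon intensity of the electricity implied by the 552-ton estimate. It assumes “tons” means metric tonnes.

```python
# GPT-3 training figures as quoted in the article.
TRAINING_MWH = 1287
HOMES_FOR_A_YEAR = 120
TONNES_CO2 = 552  # assumption: "tons" read as metric tonnes

mwh_per_home = TRAINING_MWH / HOMES_FOR_A_YEAR
# Grams of CO2 per kWh: tonnes -> grams (x1e6), MWh -> kWh (x1e3).
g_co2_per_kwh = TONNES_CO2 * 1_000_000 / (TRAINING_MWH * 1000)

print(f"Implied household use: {mwh_per_home:.1f} MWh per year")
print(f"Implied carbon intensity: {g_co2_per_kwh:.0f} g CO2 per kWh")
```

A per-kilowatt-hour figure like this depends on where and when the training ran; the same run on a cleaner grid would emit less.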

Worse, AI models are disposable now. Companies roll out newer, bigger, hungrier versions every few weeks. The electricity used to train older models? Wasted. All in the name of faster responses and fancier outputs.

And while we’re all happily typing into chatbots, a single ChatGPT query uses roughly five times the electricity of a simple web search. But hey, summarising your boss’s long email is worth melting a few glaciers, right?

What’s Next: Smarter AI or a Dumber Planet?

The five tech giants at the centre of this AI storm (Google, Microsoft, Meta, Apple, and Nvidia) already use electricity equal to about 1.7% of total US consumption, roughly as much as all of Austria. Let that sink in.

Yes, they’re investing in renewables. Yes, there’s talk of better cooling systems. But as MIT’s Elsa Olivetti warns, “There are much broader consequences that go out to a system level and persist based on actions that we take.”

Translation: we’re not just training AI; we’re locking in environmental costs that will keep piling up unless we hit pause.

So the next time you’re impressed by a chatbot composing Shakespeare in Klingon or generating a talking avocado, remember: behind that magic is a very real, very sweaty server somewhere, guzzling electricity and drinking the town dry.

Welcome to the age of AI. It’s brilliant. It’s transformative. And it’s very, very thirsty.